How to configure NGINX as a Reverse Proxy

Reverse proxying is one of the most widely deployed use cases for an NGINX instance, providing a level of abstraction and control that helps ensure the smooth flow of network traffic between clients and servers.

This is the second in a series of guides to help you prepare to become an NGINX Super User. You can sign up for the NGINX Super User Challenge at the bottom of the page.

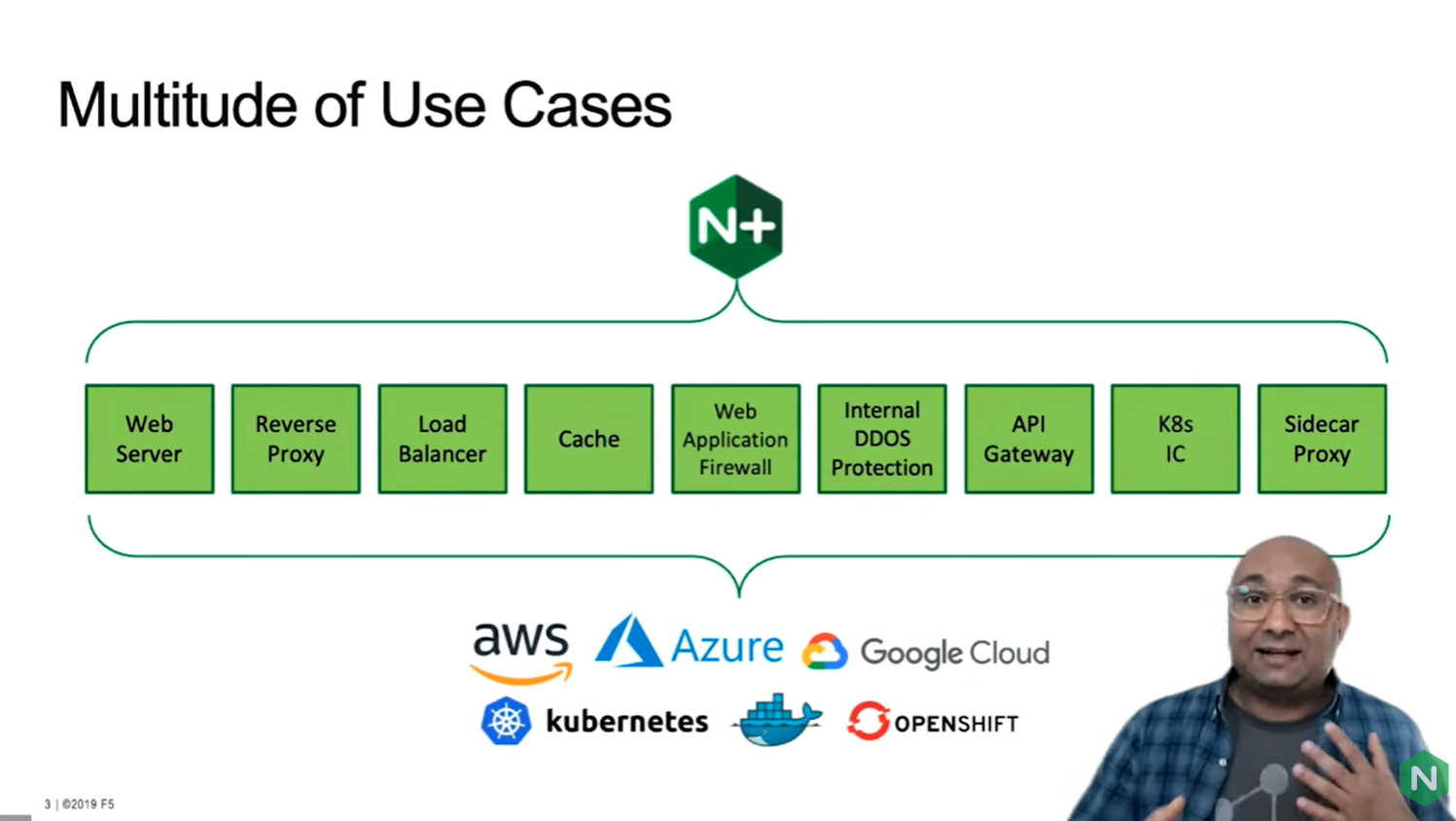

Web server, reverse proxy, load balancer and API Gateway are just a few of the use cases that can be met with NGINX's open source and NGINX+ servers. Here's how to locate and understand the configuration logic.

NGINX can run anywhere: on any cloud provider, on bare metal, or on your laptop. It also behaves exactly the same whether you are running it as a developer on your laptop or someone else is running it in a cloud provider.

Topics covered in this guide:

We'll start with a high-level understanding of forward and reverse proxies. Then we'll look at the proxy_pass directive, which NGINX uses to forward a request to an upstream or backend server. Finally, we'll show how to redefine the request headers to capture the details of the original requester or client, and how to forward those details to the actual backend server via the proxy.

This guide shows how to:

- Understand the purpose of forward proxy and reverse proxy

- Create a proxy_pass directive

- Redefine Request Headers

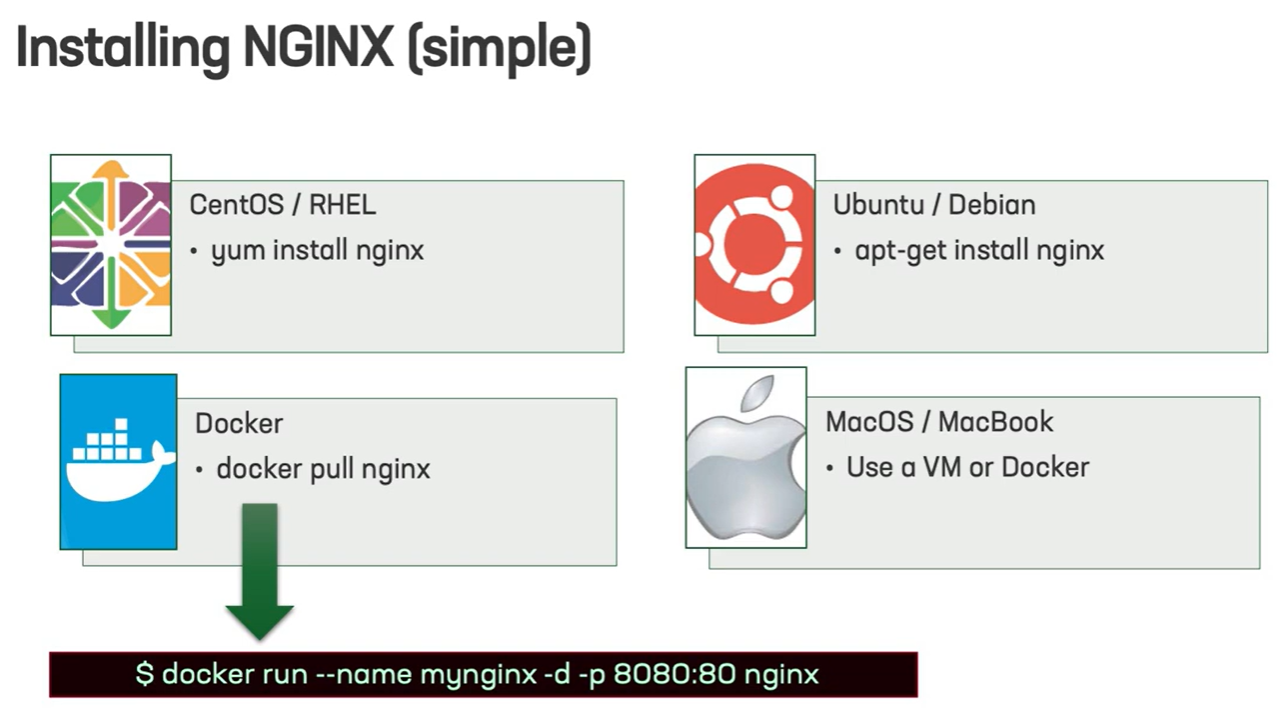

Want to install NGINX?

If you would like to follow along with the demo, you can install NGINX on your own laptop, server or cloud provider using these methods. If you need help installing, click the image to watch the video "How to get started with NGINX".

What is a Reverse Proxy

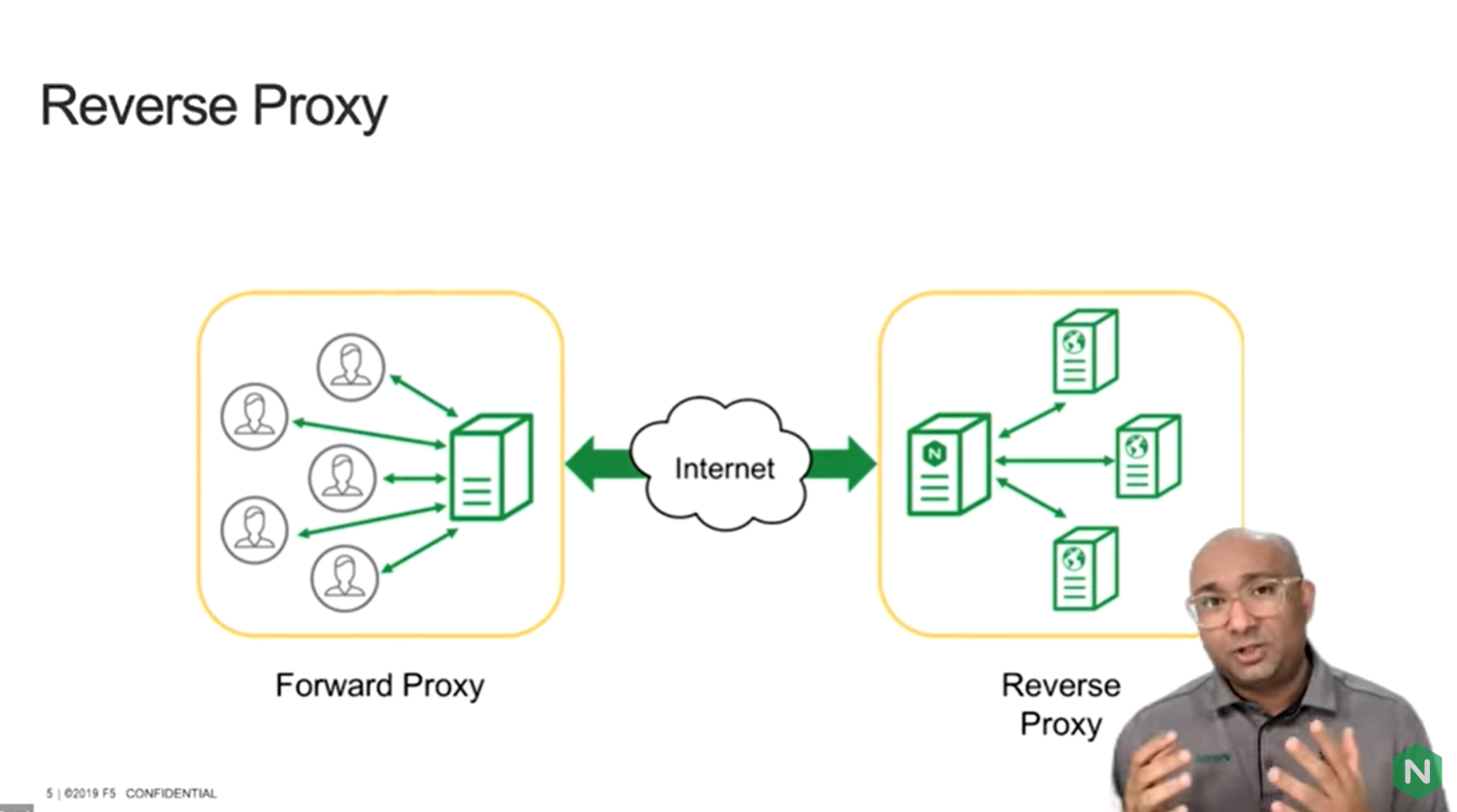

There are two ways to think about a proxy:

- A forward proxy is a client-side proxy which conceals the identity, or acts in place, of the clients; and

- A reverse proxy is a server-side proxy which conceals the identity of the actual back-end application servers, or at times acts in place of them.

Organizations generally deploy NGINX as a reverse proxy, which is one of the most common use cases for an NGINX instance.

Proxy Pass Directive

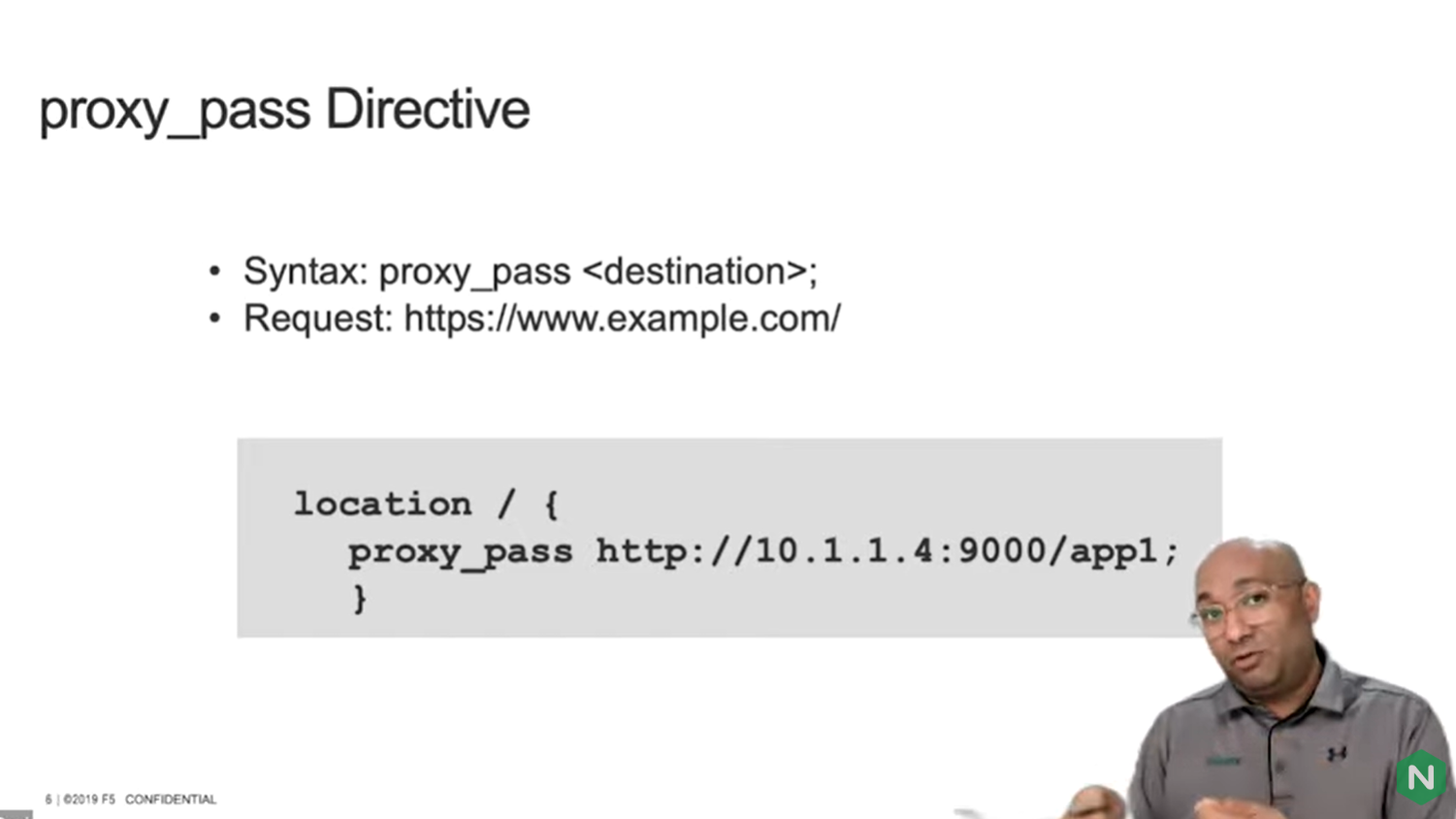

NGINX acts as a proxy by using the proxy_pass directive. The proxy_pass directive forwards an incoming request to a designated destination at the back end.

The destination address can be a domain name, an IP address (optionally with a port), a UNIX socket, an upstream name or even a set of variables.
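As a rough illustration of these destination forms, the sketch below uses one location per form. The upstream name, host names, addresses, ports and socket path are all placeholders chosen for illustration, not values from this guide's demo.

```nginx
# Hypothetical upstream group; the name and server addresses are placeholders.
upstream app_servers {
    server 10.1.1.4:8080;
    server 10.1.1.5:8080;
}

server {
    listen 80;

    # Destination given as a domain name (must be resolvable when NGINX starts)
    location /by-name/ {
        proxy_pass http://backend.example.com;
    }

    # Destination given as an IP address and port
    location /by-ip/ {
        proxy_pass http://10.1.1.4:8080;
    }

    # Destination given as a UNIX socket (the path is an assumption).
    # The socket path ends at the second colon; what follows is the URI
    # that replaces the matched location prefix.
    location /by-socket/ {
        proxy_pass http://unix:/tmp/backend.socket:/;
    }

    # Destination given as an upstream name
    location /by-upstream/ {
        proxy_pass http://app_servers;
    }
}
```

A destination built from variables is also possible, but when it contains a domain name NGINX resolves that name at request time, which requires a resolver to be configured. That form is left out here to keep the sketch short.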

The syntax for the proxy_pass directive consists of proxy_pass followed by a destination. It is generally used in a location block inside a server context.

In the example pictured above we have https.example.com. NGINX matches the incoming request against the slash (/) location and forwards the request to the destination, which in this case is 10.1.1.4.
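For readers who cannot see the image, a minimal sketch of such a configuration might look like the following. The listen port, the exact server_name value and the http scheme used toward the backend are assumptions; only the slash location and the 10.1.1.4 destination come from the description above.

```nginx
server {
    listen 80;                      # assumed port
    server_name example.com;        # assumed host name for the example site

    # Every request matches the "/" prefix and is handed to the backend
    location / {
        proxy_pass http://10.1.1.4; # backend address from the example
    }
}
```

Because proxy_pass is written here without a URI part, the original request URI is passed to the backend unchanged.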

The destination IP address most likely belongs to a web server or an application server sitting behind a firewall. Often the IP address of the NGINX or NGINX+ instance shown here is the only address that is allowed to reach the back-end application server.

So the clients connect to this reverse proxy, and the reverse proxy (which is your NGINX instance) has access to the back-end application server.

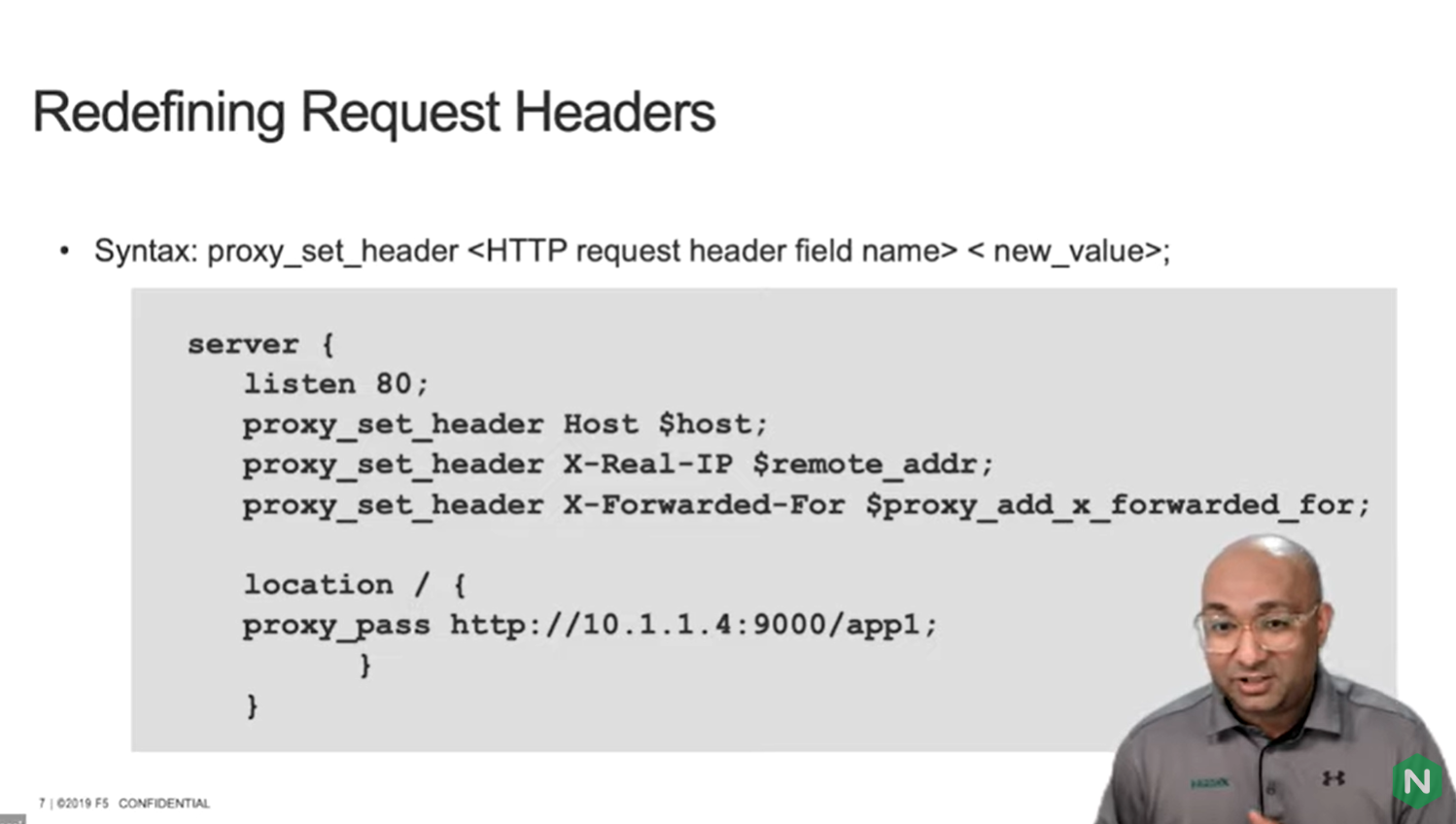

Redefining Request Headers

One thing we need to understand is how NGINX handles connections. By default, NGINX terminates the client connection at the reverse proxy and initiates a new connection to the back end, so some of the original request information is lost in the process. For example, when a client or a browser on your laptop makes a request, the request hits the reverse proxy, NGINX terminates that connection there, and a new request is sent to the backend servers.

So you want to ensure that you capture details such as the actual IP address of the original client and the host named in the request, and forward them to the back end or upstream application server.

The reason is that the log files of the backend application server record each request as coming from the NGINX instance. If every single request appears to originate from the NGINX reverse proxy, making sense of the data collected at the back end becomes an impossible task. You don't want that; you want to capture the original IP address and forward it to the back end.

In the example pictured above we show a directive called proxy_set_header. This directive enables NGINX to redefine or rewrite a header of the incoming request before it is passed on. In this case NGINX sets the Host header to the value of the $host variable when it sends the request to the backend server.
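A minimal sketch of this first header rewrite, assuming the same 10.1.1.4 backend as in the earlier example (the http scheme is an assumption):

```nginx
location / {
    # Pass the host name the client asked for instead of the proxy destination
    proxy_set_header Host $host;

    proxy_pass http://10.1.1.4;
}
```

Without this line, NGINX sets Host to $proxy_host by default, that is, the name and port of the proxy_pass destination, so the backend would not see the host name the client originally requested.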

In the second example, proxy_set_header captures the original IP address of the requester and forwards it to the backend application server, telling the backend that this is the IP address of the original requester for this request.
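This second rewrite might be sketched as follows. The header name X-Real-IP is a widely used convention and an assumption here, since the text above does not name the header; $remote_addr is the built-in NGINX variable holding the address of the client connected to NGINX.

```nginx
location / {
    # Forward the address of the client that connected to NGINX
    proxy_set_header X-Real-IP $remote_addr;

    proxy_pass http://10.1.1.4;   # backend address from the earlier example
}
```

The backend application then has to read this header, for example in its log format, for the original address to show up in its logs.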

The final proxy_set_header that you see here builds a list of the addresses that the request has traversed before it hits the backend application server.

In some cases a request passes through a couple of web servers or proxies before it reaches the backend application server.

In a scenario like that, NGINX collates all those IP addresses and sends that information to the backend application server.
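A sketch of this third rewrite is shown below. The header name X-Forwarded-For and the sample addresses in the comments are assumptions used for illustration. The $proxy_add_x_forwarded_for variable is built into NGINX: it takes the X-Forwarded-For header of the incoming request and appends $remote_addr to it, or equals $remote_addr when the incoming request has no such header.

```nginx
location / {
    # Append the address of whoever connected to NGINX to the existing list
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

    proxy_pass http://10.1.1.4;   # backend address from the earlier example
}

# Illustration with made-up addresses:
#   client 203.0.113.7 -> intermediate proxy 198.51.100.2 -> NGINX -> backend
#   request arriving at NGINX:  X-Forwarded-For: 203.0.113.7
#   request sent to backend:    X-Forwarded-For: 203.0.113.7, 198.51.100.2
```

Since the incoming header is supplied by the client or by earlier proxies, the backend should only rely on the entries added by proxies it trusts.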

Putting it all together

In this guide, we covered how to:

- Understand the purpose of forward proxy and reverse proxy

- Create a proxy_pass directive

- Redefine Request Headers

Want to watch the full guide?

In this video, NGINX's Senior Solution Engineer Jay Desai explains reverse proxies, the proxy_pass directive and redefining request headers. He then shows a live demo of NGINX acting as a reverse proxy and a web server.

Documentation and code for this guide are available at: https://github.com/jay-nginx/reverse-proxy

This has been the second in our series of guides to help you prepare to become an NGINX Super User.

You can see the first guide, Understanding NGINX Configuration Context Logic, at: https://apiconnections.co/blog/2022-05-31-nginx-super-user-config/

In subsequent guides, we will cover configuring NGINX as a:

- Load Balancer

- Instance Manager

- API Gateway

- Kubernetes Ingress Controller

- Web Application Firewall (NGINX App Protect)

Have you signed up for the NGINX Super User Challenge?

Are you a full stack developer, engineer or API designer? Do you know how to build an API Gateway, load balancer or reverse proxy?

Become an NGINX Super User to showcase your knowledge and expertise.

Learn more at: https://apiconnections.co/blog/2022-05-25-nginx-super-user-challenge